iDialogue AI Prompt Engineering

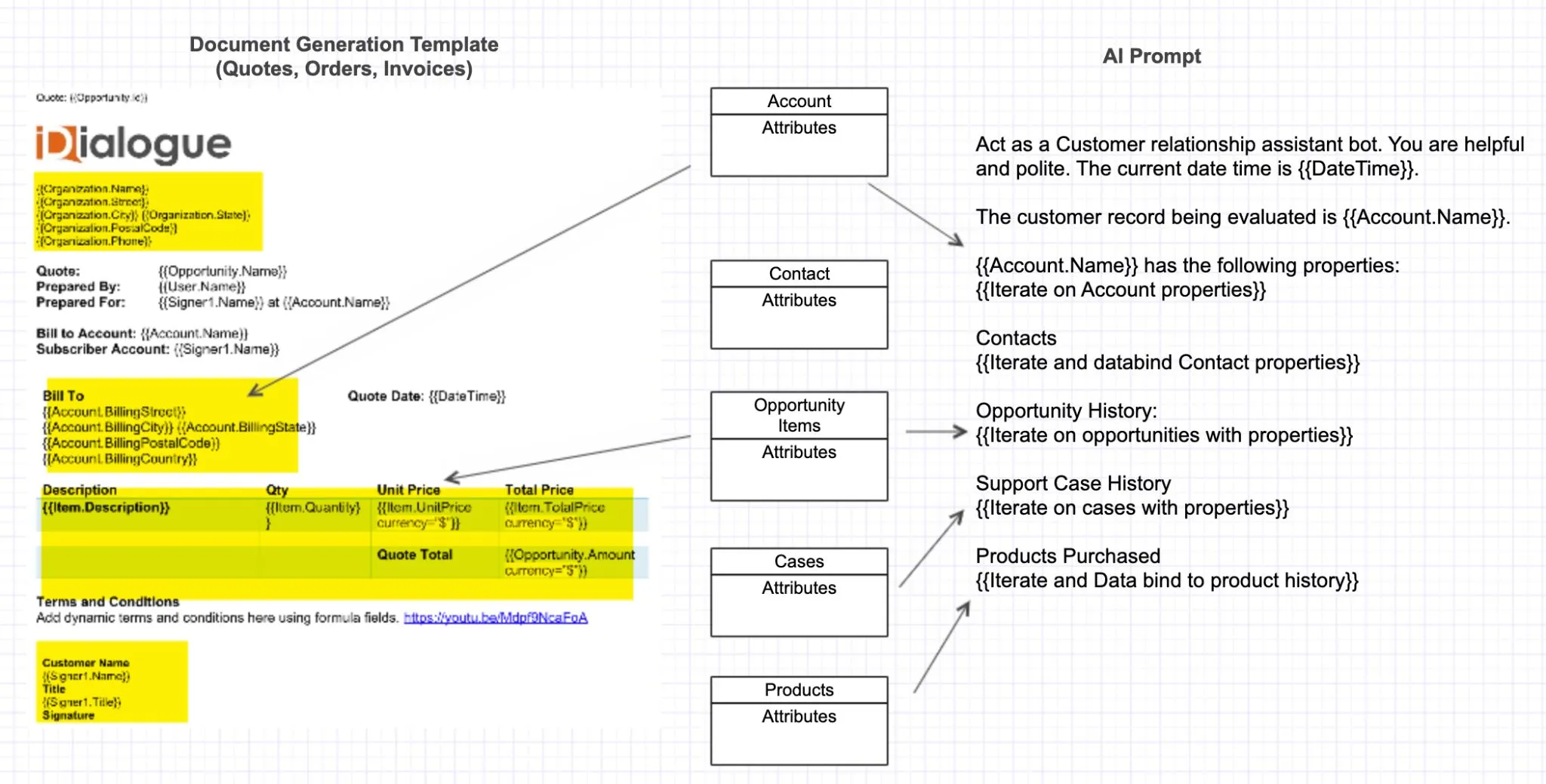

“Prompt Engineering” is the art and science of providing an AI Assistant with sufficient guidance and context to accomplish its objective(s).

The practice of generating a prompt is very similar to document generation. Both approaches merge data from multiple sources into a single document.

In the case of AI prompts, the document is simply a “text file” containing system and conversation context.
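As a rough illustration of the document-generation analogy, the sketch below merges a system context and a list of conversation turns into a single text document. It is written in Python purely for illustration: `build_prompt_document` and the `role: text` line layout are hypothetical and do not represent the format iDialogue or the underlying AI service actually uses.

```python
def build_prompt_document(system_context, turns):
    """Merge system context and conversation turns into one text document.

    Hypothetical helper for illustration only; not part of iDialogue.
    `turns` is a list of (role, text) pairs, oldest first.
    """
    # The system context always comes first in this assumed layout.
    lines = ["system: " + system_context.strip()]
    for role, text in turns:
        lines.append(f"{role}: {text.strip()}")
    return "\n".join(lines)


if __name__ == "__main__":
    doc = build_prompt_document(
        "You are a quoting assistant.",
        [("user", "What is on this quote?")],
    )
    print(doc)
```

The point of the sketch is only that a prompt is assembled the same way a merged document is: fixed context first, then data pulled in from other sources.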

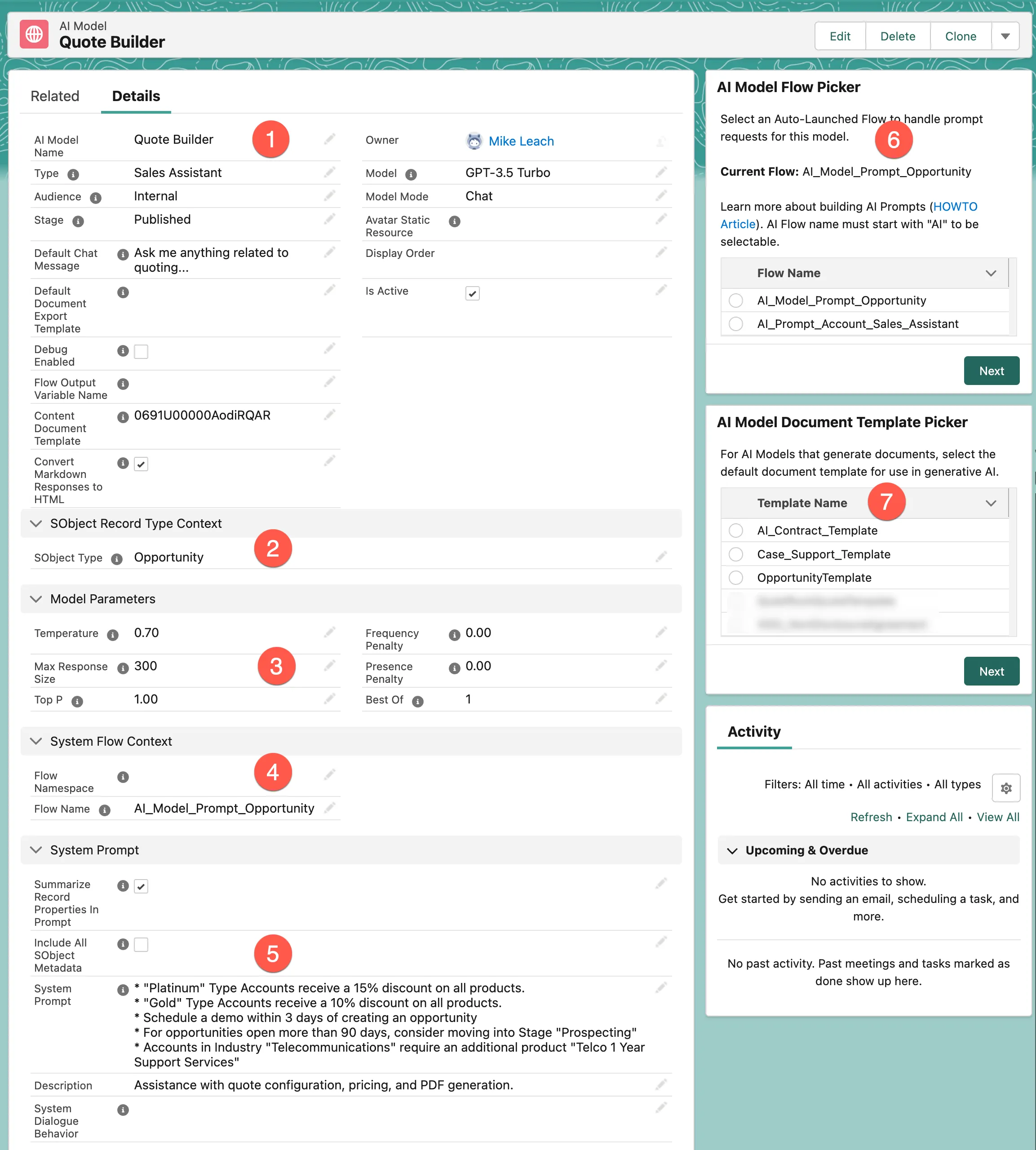

AI Model

Core to every AI Assistant is the “Model”. Elements of a model include:

1. Model name, avatar, default prompt, and other high-level parameters.

2. SObject type. The full developer API name is required.

3. OpenAI model parameters. Use the OpenAI Playground to explore capabilities and behaviors.

4. An auto-launched flow that is invoked to create the default system prompt for a conversational dialogue session.

5. System prompt. May be used to provide additional context for the model.

6. Select an auto-launched flow (the flow name must start with “AI_” to be displayed).

7. Select a default document template for use in generative document AI prompts.

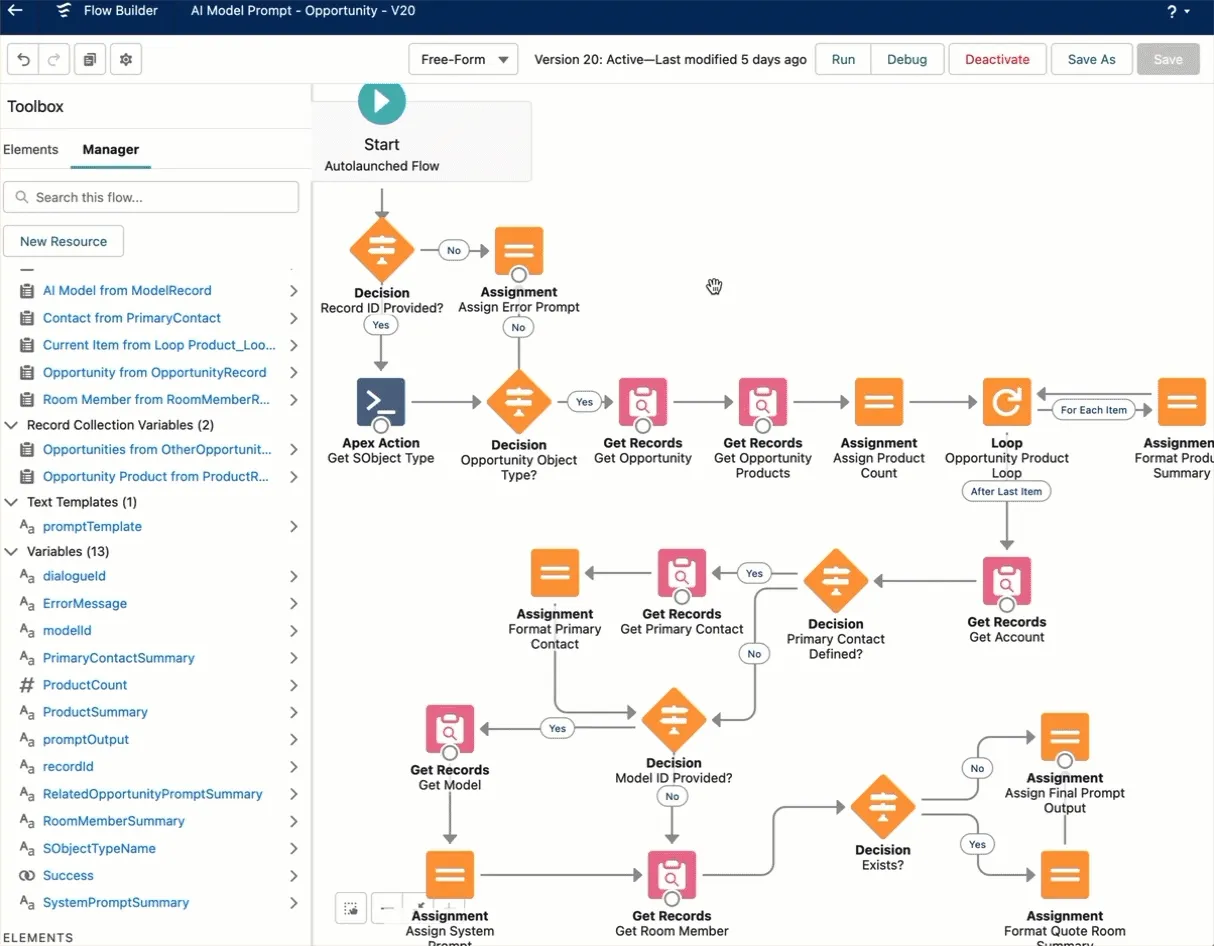

FlowGPT

iDialogue utilizes “Auto-Launched” flows for prompt engineering and AI orchestration. The flow name must start with “AI_” to be included in the list of available flows (in step 6 above).

FlowGPT flows receive several input variables that describe the conversation context.

Flows may optionally initialize the system prompt upon first message in a Dialogue, or override the assistant response.

Flow Inputs

- recordId (Text)

- dialogueId (Text)

- modelId (Text)

- userPrompt (Text)

FlowGPT Developers should check all input variables for NULL before use.

A recordId is always provided when the AI Assistant is used on a page layout, but it may be NULL when the assistant is used at global scope, in which case the flow should make no assumptions about record context.

dialogueId is generated by the Dialogue API. If a dialogueId is not provided, then the flow must assume this is the first message in the dialogue, and execute any system prompt initialization flows.

Since prompt initialization can be compute- and time-intensive, init flows should be executed only once per Dialogue session. Subsequent chat requests will provide a valid dialogueId, which can be used to determine actionable intent, moderation, and similarity searches for branching conditions.
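The branching described above can be sketched outside of Flow Builder as follows. This is a minimal Python illustration, not iDialogue code: `resolve_system_prompt` and `init_system_prompt` are hypothetical names, and returning `None` on later messages (leaving the systemPrompt output blank) is an assumption about how a flow would skip re-initialization.

```python
from typing import Callable, Optional


def resolve_system_prompt(
    dialogue_id: Optional[str],
    init_system_prompt: Callable[[], str],
) -> Optional[str]:
    """Run the expensive initializer only on the first message of a dialogue.

    Hypothetical sketch: a missing (None or empty) dialogue_id is treated as
    "first message". On later messages the initializer is skipped and None
    is returned, which is an assumed way of leaving systemPrompt blank.
    """
    if not dialogue_id:
        return init_system_prompt()
    return None
```

In an actual flow the same check would be a Decision element on the dialogueId input, with the initialization logic on the "is null" branch only.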

The modelId is always provided and is never null. Storing AI behaviors on the model is useful for making many smaller, declarative changes to the model without updating a flow. Static system prompt context may also be stored on the Model record.

The userPrompt input variable provides the user’s chat message. The flow handler may interpret the user’s intent using methods such as the Similarity Search Action, and conditionally branch, or return a custom assistantResponse (output) based on the userPrompt.

Flow Outputs

The Dialogue API supports two output variables.

- systemPrompt (Text)

- assistantResponse (Text)

systemPrompt contains all the system-level text required to support a conversation. For example, a Quote Builder bot needs context about the Opportunity, related line items, Products, Account, and any other relevant records.

The system prompt is typically only generated once upon the first message in a dialogue conversation (determined by checking if input dialogueId is null).

assistantResponse - When this variable is left blank/null, the userPrompt is forwarded to the AI for a response. Flows may intercept user prompts and respond with a specific assistantResponse, particularly when actions are executed. The assistant response should generally use the same persona as the AI model.
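The intercept-or-forward behavior can be pictured with the small Python sketch below. It is only an illustration under stated assumptions: `assistant_response_for` and `CANNED_REPLIES` are hypothetical, and a plain substring match stands in for a real intent check such as the Similarity Search Action.

```python
from typing import Optional

# Hypothetical trigger phrases and replies, for illustration only.
CANNED_REPLIES = {
    "create quote": "I've started a new quote for you.",
}


def assistant_response_for(user_prompt: Optional[str]) -> Optional[str]:
    """Return a custom reply when the prompt matches a known intent.

    Returning None models leaving assistantResponse blank, so the
    userPrompt would be forwarded to the AI for a response.
    """
    if not user_prompt:  # inputs may be NULL, so check before use
        return None
    normalized = user_prompt.lower()
    for phrase, reply in CANNED_REPLIES.items():
        if phrase in normalized:  # simple stand-in for intent detection
            return reply
    return None
```

For example, `assistant_response_for("Please create quote for Acme")` returns the canned reply, while an unrelated message returns `None` and falls through to the model.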

Prompt Text Templates

It’s recommended to use a “Text Template” resource in flows to assemble the prompt dynamically, then assign the text template to the systemPrompt output as the last element in the flow.

Flow formula fields are useful within loops that iterate over a record collection. The formatting of each record is handled by a formula field, and the result is assigned to a summary text field.
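The loop-and-formula pattern is roughly equivalent to the Python sketch below. The record fields (`name`, `quantity`, `unit_price`) and both function names are hypothetical; in a real flow the per-record formatting would live in a formula resource and the accumulation in an Assignment element inside the loop.

```python
def format_line_item(item):
    """Plays the role of the flow formula: format one record as one line."""
    return f"- {item['name']}: {item['quantity']} x {item['unit_price']:.2f}"


def summarize_line_items(items):
    """Plays the role of the loop: append each formatted record to a summary."""
    summary = ""
    for item in items:
        summary += format_line_item(item) + "\n"
    return summary


if __name__ == "__main__":
    # Hypothetical line items for illustration.
    items = [
        {"name": "Widget", "quantity": 2, "unit_price": 9.5},
        {"name": "Gadget", "quantity": 1, "unit_price": 20},
    ]
    print(summarize_line_items(items))
```

The resulting summary text can then be merged into the text template that becomes the system prompt.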